Dictionary

White balance aims to neutralize the color temperature of an acquired image by adding the opposite color, in an attempt to bring the color temperature back to neutral.
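As an illustration, one common software approach is to scale each color channel so that a patch known to be neutral comes out gray. The sketch below assumes an 8-bit RGB image held in a NumPy array; the function name `white_balance` and the sample values are hypothetical and do not describe any specific camera's implementation.

```python
import numpy as np

def white_balance(image, neutral_patch):
    """Scale R, G, B so that the mean of a known-neutral patch becomes gray."""
    image = image.astype(np.float64)
    # Mean R, G, B of the patch that should look neutral
    patch_mean = neutral_patch.reshape(-1, 3).mean(axis=0)
    # Per-channel gains that pull each channel toward the common gray level
    gains = patch_mean.mean() / patch_mean
    return np.clip(image * gains, 0, 255).astype(np.uint8)

# A uniformly orange-tinted image, used here as its own neutral reference
img = np.full((2, 2, 3), (200, 100, 50), dtype=np.uint8)
balanced = white_balance(img, img)
```

After correction the three channels of the reference patch carry the same value, which is what "neutral" means in this sketch.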

Triggering is a camera feature that lets the user control when the acquisition process begins.

Cameras can be set up to start acquisition after receiving input from an external device (e.g. position sensors, encoders).

This technique is essential when taking images of moving objects to ensure that the features of interest are in the field of view of the imaging system.

Thresholding starts with setting or determining a gray value that will be useful for the following steps.

The value is then used to separate portions of the image, and sometimes to transform each portion of the image to simply black and white based on whether it is below or above that grayscale value.
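A minimal sketch of the black-and-white transformation described above, assuming an 8-bit grayscale image stored in a NumPy array; the function name `threshold` and the level of 127 are illustrative choices only.

```python
import numpy as np

def threshold(gray, level):
    """Return a binary image: 255 where gray > level, 0 elsewhere."""
    return np.where(gray > level, 255, 0).astype(np.uint8)

gray = np.array([[10, 200],
                 [128, 90]], dtype=np.uint8)
binary = threshold(gray, 127)
```

Pixels above the chosen gray value become white and all others become black.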

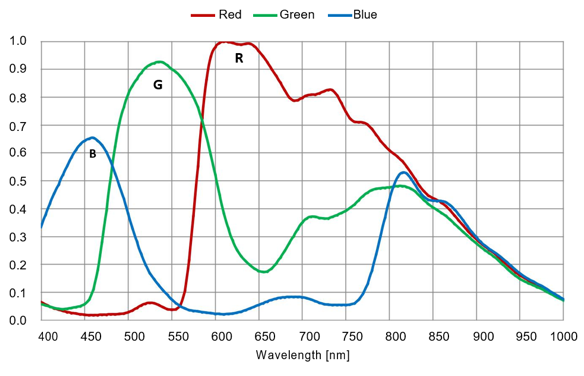

Spectral sensitivity is a parameter describing how efficiently light intensity is registered at different wavelengths.

Machine vision systems, usually based on CCD or CMOS cameras, detect light from 350 to 900 nm, with the peak zone being between 400 and 650 nm.

Other kinds of sensors can also cover the UV spectrum or, at the opposite end, near-infrared (NIR) light; longer wavelengths such as SWIR or LWIR require drastically different technology.

The signal-to-noise ratio (often abbreviated SNR or S/N) is the ratio between the maximum signal and the overall noise, measured in dB.

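The maximum SNR is limited by shot noise, which depends on the physical nature of light and is therefore unavoidable. It can be approximated as

$$\mathrm{SNR}_{max} = \sqrt{N_{sat}}$$

where $N_{sat}$ is the maximum saturation capacity, in electrons, of a single pixel.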

SNR limits the meaningful grey levels in the conversion between the analog signal (continuous) and the digital one (discrete).
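A rough numerical sketch of these relations, assuming the usual shot-noise approximation (maximum SNR equal to the square root of the pixel's saturation capacity in electrons) and the 20·log10 convention for decibels. The 10,000-electron capacity is a made-up example value, not a figure for any particular sensor.

```python
import math

def shot_noise_limited_snr(full_well_electrons):
    """Return (linear SNR, SNR in dB, meaningful bits) at saturation."""
    snr = math.sqrt(full_well_electrons)   # shot noise is sqrt(signal)
    snr_db = 20 * math.log10(snr)          # assumed dB convention
    bits = math.log2(snr)                  # distinguishable gray levels, in bits
    return snr, snr_db, bits

snr, snr_db, bits = shot_noise_limited_snr(10_000)
```

With these numbers the SNR is 100 (40 dB), which corresponds to fewer than 7 meaningful bits, so digitizing such a signal with many more bits adds little real information.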

In machine vision, the shutter speed is the time for which the shutter is held open during the taking of an image to allow light to reach the imaging sensor. In combination with variation of the lens aperture, this regulates how much light the imaging sensor in a digital camera will receive.

A shutter is a device that allows light to pass for a determined time, in order to expose the image sensor to the right amount of light to create a permanent image of a view.

Shot noise is a direct consequence of the nature of light. It becomes noticeable when the light intensity is very low: over the small surface of a single pixel, the relative fluctuation in the number of photons collected over time is significant.
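The effect can be illustrated with a small simulation, assuming photon arrivals follow Poisson statistics (the standard model for this kind of noise). The photon counts of 100 and 10,000 are arbitrary example values.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def relative_fluctuation(mean_photons, samples=100_000):
    """Standard deviation divided by mean for simulated photon counts."""
    counts = rng.poisson(mean_photons, size=samples)
    return counts.std() / counts.mean()

low_light = relative_fluctuation(100)      # close to 1/sqrt(100)
bright = relative_fluctuation(10_000)      # close to 1/sqrt(10000)
```

The relative fluctuation scales roughly as one over the square root of the photon count, so it is about ten times larger in the low-light case.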

The sequencer is a feature that allows you to change camera configurations during burst acquisition.

The Alkeria Advanced Sequencer mechanism lets users define up to 64 different video settings that are cycled over a succession of consecutive frames.

The user can move the ROI within the field of view, control different light sources, and change acquisition parameters for each frame, performing multiple complex inspections in a single pass of the target.

An image sensor is an electronic device containing a large number of small light-sensitive areas (pixels), where photons generate electric charges that are transformed into an electric signal.

Sensitivity is a parameter that quantifies how the sensor responds to light. It is strictly connected to quantum efficiency.

Segmentation is the partitioning of a digital image into multiple segments to simplify and/or change its representation into something more meaningful and easier to analyze.

SDK stands for Software Development Kit.

RoHS (Restriction of Hazardous Substances) regulates the use of environmentally hazardous substances in products, e.g. lead, cadmium, hexavalent chromium, mercury, polybrominated biphenyls, and polybrominated diphenyl ethers.

The RGB color model utilizes the additive model in which red, green, and blue light are combined in various ways to create other colors.

The region of interest (ROI) defines the area of the sensor used to capture the image.

Users can customize its position on the sensor and the number of pixel rows and columns it includes.

During acquisition, only the pixel information within the specified area is transmitted out of the camera.

This is recommended when the key information being sought can be found in a smaller portion of the image.

A smaller ROI generally leads to higher maximum frame rates: since the resulting images are smaller than the full sensor resolution, they are handled and processed faster, and data volumes are kept smaller.
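The data reduction can be sketched in software as a simple array crop. This is only an illustration: on a real camera the ROI is applied on the sensor before transmission, and the function name `crop_roi`, the 1920x1080 frame, and the ROI coordinates are all hypothetical.

```python
import numpy as np

def crop_roi(frame, x, y, width, height):
    """Return the region of the frame starting at (x, y) with the given size."""
    return frame[y:y + height, x:x + width]

full = np.zeros((1080, 1920), dtype=np.uint8)       # full-resolution frame
roi = crop_roi(full, x=640, y=300, width=640, height=480)
ratio = roi.nbytes / full.nbytes                    # fraction of data kept
```

In this example the ROI carries roughly 15% of the full-frame data, which is why less time is needed to transfer and process each image.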

Quantum efficiency is the percentage of photons effectively converted into electrons at a given wavelength.

Quantization noise is related to the conversion of the continuous analog voltage into discrete digital values.
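The size of the error introduced by this conversion can be estimated numerically. The sketch below assumes an ideal uniform 8-bit converter and a signal normalized to the range 0 to 1; for such a converter the error is known to have a standard deviation of about one step divided by the square root of 12.

```python
import numpy as np

def quantize(signal, bits):
    """Round a signal in [0, 1] to the nearest of 2**bits discrete levels."""
    levels = 2 ** bits - 1
    return np.round(signal * levels) / levels

signal = np.linspace(0.0, 1.0, 100_001)            # smooth analog ramp
error = quantize(signal, bits=8) - signal          # quantization error
measured = error.std()
expected = (1 / 255) / np.sqrt(12)                 # step / sqrt(12)
```

The measured and expected values agree closely, and each additional bit halves the step and therefore the noise.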

Pixel defects can be of three kinds: hot, warm, and dead pixels.

Hot pixels are elements that always saturate (give the maximum signal, e.g. full white) whatever the light intensity.

Dead pixels behave in the opposite way, always giving a zero (black) signal.

Warm pixels produce a random signal. These kinds of defects are independent of the intensity and exposure time, so they can be easily removed – e.g. by digitally substituting them with the average value of the surrounding pixels.
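The neighbor-averaging correction mentioned above can be sketched as follows, assuming the defective pixel coordinates are already known (for example from a factory defect map). The function name `correct_defects` and the sample image are hypothetical.

```python
import numpy as np

def correct_defects(image, defects):
    """Replace each listed (row, col) pixel with the mean of its good neighbors."""
    fixed = image.astype(np.float64)
    h, w = image.shape
    bad = set(defects)
    for (r, c) in defects:
        neighbors = [
            image[rr, cc]
            for rr in range(max(r - 1, 0), min(r + 2, h))
            for cc in range(max(c - 1, 0), min(c + 2, w))
            if (rr, cc) != (r, c) and (rr, cc) not in bad
        ]
        if neighbors:  # leave the pixel unchanged if no good neighbor exists
            fixed[r, c] = np.mean(neighbors)
    return fixed.astype(image.dtype)

img = np.full((3, 3), 100, dtype=np.uint8)
img[1, 1] = 255                                   # simulated hot pixel
clean = correct_defects(img, [(1, 1)])
```

Other defective pixels are excluded from the average so that one defect does not contaminate the correction of another.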

A pixel is one of the many tiny dots that make up the representation of a picture in a computer's memory or screen.

Optical resolution describes the ability of a system to distinguish, detect, and/or record physical details by electromagnetic means.

The system may be imaging (e.g., a camera) or non-imaging (e.g., a quad-cell laser detector).

An Original Equipment Manufacturer (OEM) manufactures products or components that are then purchased by another company and retailed under that purchasing company's brand name.

The term OEM refers to the company that originally manufactured the product.

Image noise is a random variation of brightness or color information in images, and is usually an aspect of electronic noise.

Noise can be caused by geometric, physical, or electronic factors, and it can be randomly distributed as well as constant.

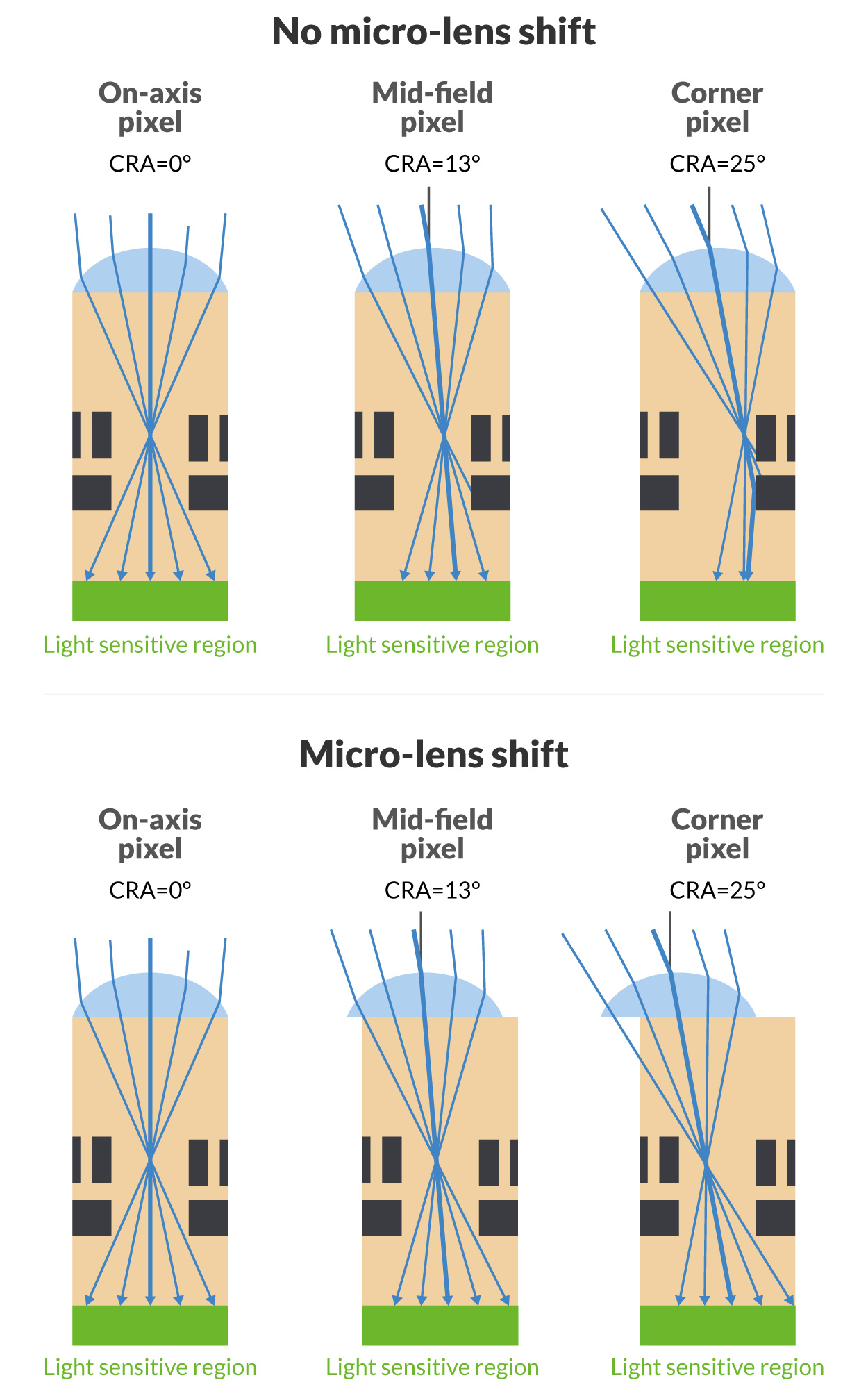

Micro-lenses are usually centered over each pixel's active area, regardless of their relative position on the sensor surface.

However, some sensors can be equipped with micro-lenses that are gradually shifted going from the center to the corners of the sensor, which helps to obtain more uniform sensitivity across the sensor.

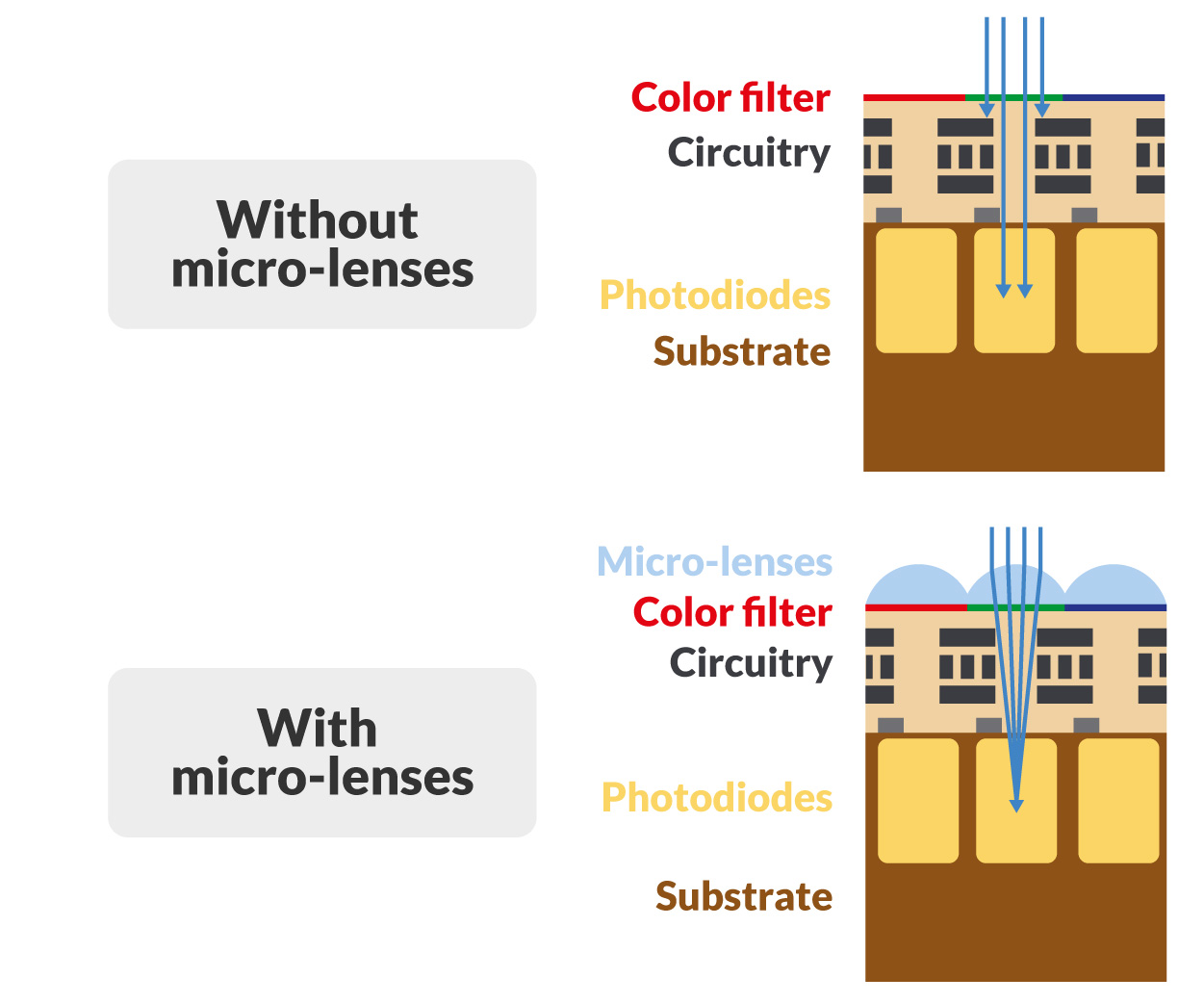

Especially on CMOS sensors, each pixel's active area is surrounded and surmounted by circuitry and metal connections responsible for the image readout, which reduces the amount of light that can be successfully detected.

If the light rays are not perpendicular to the sensor surface, they can even be reflected by nearby interconnections on the metal layers of the sensor chip.

Almost all modern image sensors are coated with an array of micro-lenses. These lenses gather incident light and focus it on the sensitive area of the pixel, thus increasing sensitivity.

Metrology is the science of measurement. There are lots of applications for machine vision in metrology.

Line scan cameras have an image sensor consisting of only one or two lines of pixels.

In computer graphics and photography, a color histogram is a representation of the distribution of colors in an image, derived by counting the number of pixels of each of a given set of color ranges in a typical two-dimensional (2D) or three-dimensional (3D) color space.

A histogram is a standard statistical description of a distribution in terms of occurrence frequencies of different event classes; for color, the event classes are regions in color space.
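As a small illustration, a 3D color histogram can be computed by dividing each RGB axis into a few ranges and counting the pixels that fall into each resulting region. The sketch assumes an 8-bit RGB image in a NumPy array and uses `numpy.histogramdd`; the choice of 4 bins per channel is arbitrary.

```python
import numpy as np

def color_histogram(image, bins_per_channel=4):
    """Count pixels in each cell of a coarse 3D grid over RGB space."""
    pixels = image.reshape(-1, 3).astype(np.float64)
    hist, _ = np.histogramdd(
        pixels,
        bins=(bins_per_channel,) * 3,
        range=((0, 256),) * 3,
    )
    return hist

img = np.zeros((2, 2, 3), dtype=np.uint8)   # three black pixels...
img[0, 0] = (255, 0, 0)                     # ...and one pure red pixel
hist = color_histogram(img)
```

Here `hist[0, 0, 0]` counts the dark pixels and `hist[3, 0, 0]` counts the strongly red ones.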

Gain noise is caused by differences in the behavior of individual pixels (in terms of sensitivity and gain). It is an example of ‘constant noise’ that can be measured and eliminated.
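One widely used way to measure and remove this kind of constant pixel-to-pixel variation is flat-field correction: an image of a uniformly lit target records each pixel's relative response, which is then divided out. The sketch below is a simplified version that ignores dark-frame subtraction; the function name and sample values are hypothetical.

```python
import numpy as np

def flat_field_correct(raw, flat):
    """Equalize per-pixel response using an image of a uniform target."""
    flat = flat.astype(np.float64)
    gain_map = flat.mean() / flat      # > 1 for weak pixels, < 1 for strong ones
    return raw * gain_map

# Response of four pixels to a uniform target (ideally they would be equal)
flat = np.array([[100.0, 110.0],
                 [90.0, 100.0]])
raw = flat * 0.5                       # same sensor viewing a scene half as bright
corrected = flat_field_correct(raw, flat)
```

After correction all four pixels report the same value for the uniform scene.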

Gain is a measure of the ability to increase the amplitude of a signal from the input to the output port.

In a digital camera, gain is a way to increase the amount of signal collected by the image sensor. Increasing the gain value gives brighter images but increases image noise as well.

Full well capacity is the largest charge that a pixel can hold before overflowing into adjacent pixels, causing so-called blooming.

Both full well capacity and dark noise are decisive for the dynamic range of a sensor or camera.

Frame rate, measured in fps, describes the number of frames that are captured within a time unit.

Higher frame rates are recommended when fast movements must be captured without blur, thus providing consistently high image quality. The frame rate must be adjusted for each application.

Frames per second (fps) is the unit of frame rate.

An image, or image point or region, is said to be in focus if light from object points is converged about as well as possible in the image; conversely, it is out of focus if the light is not well converged.

The border between these conditions is sometimes defined via a circle of confusion criterion.

Fixed pattern noise (FPN) is a non-homogeneity in the image due to mismatches across the different pixel circuitries.

An FPGA (field-programmable gate array) is an integrated circuit designed to be configured by a customer or a designer after manufacturing.

Many FPGAs can be reprogrammed to implement different logic functions, allowing flexible reconfigurable computing as performed in computer software.

The field of view (FOV) is the part that can be seen by the machine vision system at one moment.

The field of view depends on the lens of the system and on the working distance between the object and the camera.
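A rough estimate of this dependence, assuming a simple pinhole (thin-lens) model with a working distance much larger than the focal length. The formula is a textbook approximation rather than something stated in this dictionary, and the sensor width, focal length, and distance are example values.

```python
def field_of_view_mm(sensor_size_mm, focal_length_mm, working_distance_mm):
    """Approximate FOV along one axis: sensor size scaled by distance / focal length."""
    return sensor_size_mm * working_distance_mm / focal_length_mm

# 8.8 mm wide sensor, 25 mm lens, object 500 mm away
fov = field_of_view_mm(sensor_size_mm=8.8, focal_length_mm=25.0,
                       working_distance_mm=500.0)
```

Under these assumptions the system sees about 176 mm of the object; a shorter focal length or a longer working distance widens the field of view.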

The F-mount is a bayonet-type lens mount introduced by Nikon. It has a flange-to-image-plane distance of 46.5 mm (1.83 inches).

Exposure time is the amount of time during which light is allowed to reach the sensor. Increasing the exposure time gives brighter images, but there are drawbacks: blur can appear when dealing with moving objects, and noise increases with longer exposure times.

With a longer exposure time, a moving object is imaged onto several different pixels, causing the well-known ‘motion blur’ effect.

Furthermore, long exposure times can lead to overexposure – namely, when a number of pixels reach maximum capacity and thus appear to be white, even if the light intensity on each pixel is actually different.
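The amount of motion blur can be estimated from the object speed and the exposure time. The sketch below assumes the object moves parallel to the sensor at constant speed and that the field of view maps evenly onto the sensor width; all names and numbers are illustrative.

```python
def motion_blur_pixels(speed_mm_s, exposure_s, fov_width_mm, sensor_width_px):
    """Number of pixels an object travels across during one exposure."""
    mm_per_pixel = fov_width_mm / sensor_width_px
    return speed_mm_s * exposure_s / mm_per_pixel

# Object at 500 mm/s, 1 ms exposure, 100 mm field of view on 1000 pixels
blur = motion_blur_pixels(speed_mm_s=500.0, exposure_s=0.001,
                          fov_width_mm=100.0, sensor_width_px=1000)
```

In this example the object smears across about 5 pixels; halving the exposure time halves the blur.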

Radio Frequency Interference (RFI) is electromagnetic radiation that is emitted by electrical circuits carrying rapidly changing signals, as a by-product of their normal operation, and which causes unwanted signals (interference or noise) to be induced in other circuits.

Edge detection (ED) marks the points in a digital image at which the luminous intensity changes sharply. It also marks the points of luminous intensity changes of an object or spatial-taxon silhouette.
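A minimal sketch of the idea, assuming a grayscale image in a NumPy array: the intensity gradient is computed with `numpy.gradient`, and points where its magnitude exceeds a chosen threshold are marked as edges. Practical detectors (Sobel, Canny, and similar) are more elaborate; the threshold of 50 is arbitrary.

```python
import numpy as np

def edge_map(gray, threshold):
    """Mark pixels where the intensity gradient magnitude exceeds the threshold."""
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)            # derivatives along rows and columns
    magnitude = np.hypot(gx, gy)
    return magnitude > threshold

img = np.zeros((4, 6))
img[:, 3:] = 255                       # dark left half, bright right half
edges = edge_map(img, 50)
```

Only the columns on either side of the dark-to-bright transition are marked as edges.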

Dynamic range is the ratio between the maximum and the minimum signal that a sensor can acquire.

It indicates the ability of a sensor to produce an image of an area that includes both very low light (shadowed) and full light situations simultaneously with minimum noise or interference.

It can also be described as the ratio of the largest signal to the smallest signal that can be distinguished from noise in an image.

Dynamic range is usually expressed as the logarithm of the max-to-min ratio, either in base 10 (decibels) or base 2 (doublings or stops).

In optics, particularly photography and machine vision, the depth of field (DOF) is the range of distances in front of and behind the subject that appears to be in focus.
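As a worked example, the conversion between the two logarithmic units can be sketched as follows, assuming the 20·log10 convention for decibels. The signal values (a 20,000-electron maximum and a 10-electron minimum) are hypothetical.

```python
import math

def dynamic_range(max_signal, min_signal):
    """Return the ratio, its value in decibels, and its value in stops."""
    ratio = max_signal / min_signal
    return ratio, 20 * math.log10(ratio), math.log2(ratio)

ratio, db, stops = dynamic_range(20_000, 10)
```

A ratio of 2000:1 corresponds to about 66 dB, or roughly 11 stops.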

A standard image sensor captures black-and-white images. Color images require the use of a color matrix.

The most frequently used matrix is the Bayer pattern. The process, known as Bayering, composes a mosaic from the color pixels, although the color values for that mosaic are incomplete.

To produce a proper color image, those color values are reconstructed using a dedicated algorithm: this process is known as Debayering or Demosaicing.

Demosaicing can either be performed directly within the camera's firmware or afterward in post-production using the raw data.
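To illustrate the reconstruction step, the sketch below fills in the missing green values of a Bayer mosaic by averaging the neighboring green pixels. It assumes an RGGB layout (green wherever row plus column is odd) and handles only the green channel; it is a simplified bilinear example, not the algorithm used by any particular camera firmware.

```python
import numpy as np

def interpolate_green(raw):
    """Estimate green at red/blue sites of an RGGB mosaic from adjacent greens."""
    raw = raw.astype(np.float64)
    rows, cols = np.indices(raw.shape)
    green_mask = (rows + cols) % 2 == 1              # green sites in RGGB
    padded = np.pad(raw * green_mask, 1, mode="constant")
    count = np.pad(green_mask.astype(np.float64), 1, mode="constant")
    # Sum and count of the up, down, left, and right green neighbors
    total = (padded[:-2, 1:-1] + padded[2:, 1:-1] +
             padded[1:-1, :-2] + padded[1:-1, 2:])
    n = (count[:-2, 1:-1] + count[2:, 1:-1] +
         count[1:-1, :-2] + count[1:-1, 2:])
    # Keep measured greens; use the neighbor average everywhere else
    return np.where(green_mask, raw, total / np.maximum(n, 1))

raw = np.array([[10, 80, 10, 80],
                [80, 30, 80, 30],
                [10, 80, 10, 80],
                [80, 30, 80, 30]], dtype=np.uint8)
green = interpolate_green(raw)
```

Because every green site in this test mosaic holds the value 80, the reconstructed green plane is 80 everywhere, including at the red and blue sites.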

Dark noise is caused by electrons randomly generated by thermal effects. The number of thermal electrons, and with it the related noise, grows with temperature and exposure time.

In visual perception, contrast is the difference in visual properties that makes an object (or its representation in an image) distinguishable from other objects and the background.

Computer vision is the study and application of methods that allow computers to "understand" image content.

"White light" is commonly described by its color temperature. A traditional incandescent light source's color temperature is determined by comparing its hue with a theoretical, heated black-body radiator.

The lamp's color temperature is the temperature in kelvins at which the heated black-body radiator matches the hue of the lamp.

Image sensors are capable of delivering only gray values. To obtain full-color information, each pixel is covered by a color filter (red, green, or blue) following an assigned pattern (e.g. the Bayer pattern), so that the pixel delivers the gray value for the color of its filter.

If a color value is not measured at a given pixel, it can be interpolated using the gray values delivered by the neighboring pixels.

An alternative to this technique is using a different sensor for each primary color.

Identify parts, products, and items using color, assess quality from color, and isolate features using color.